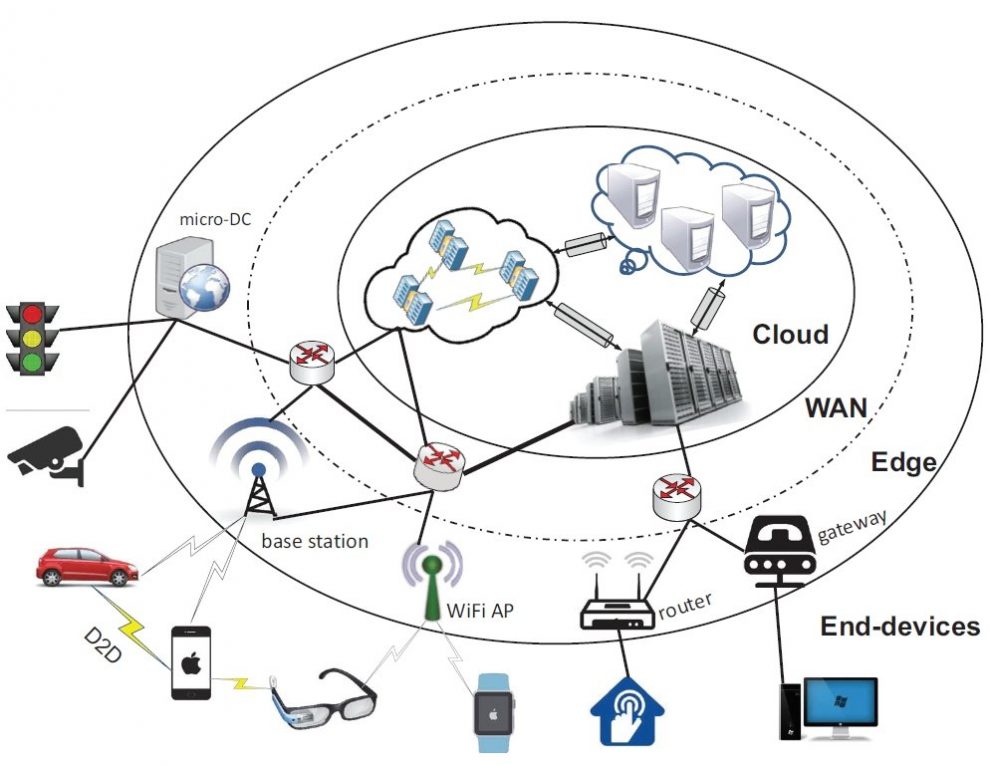

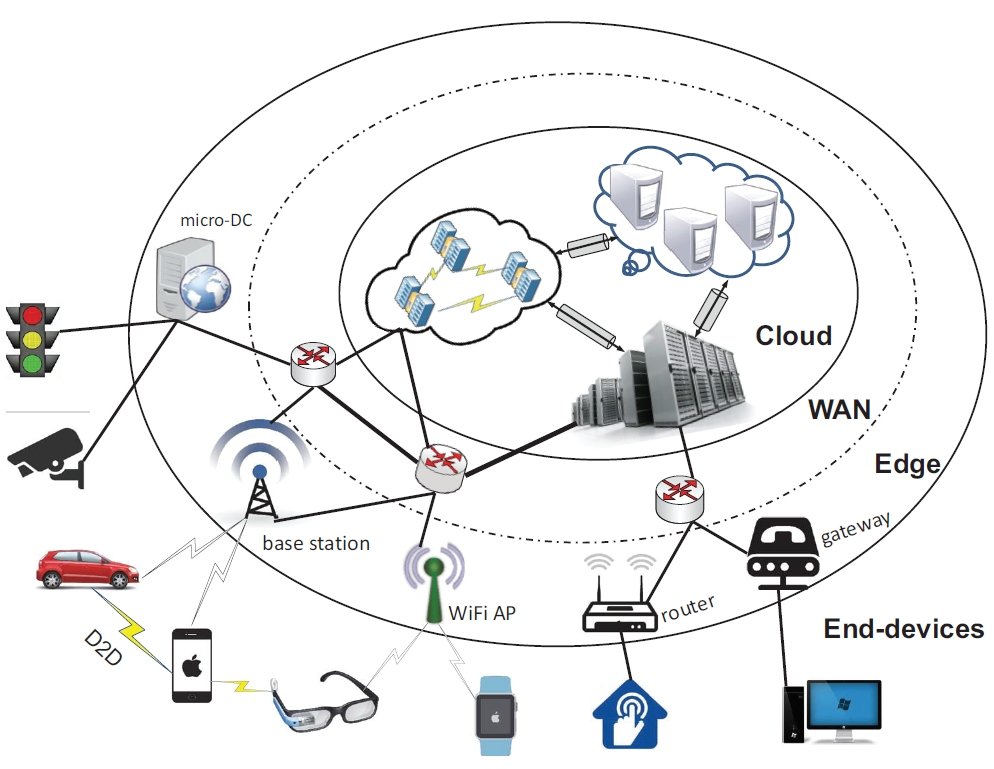

Equipping connected edge hardware with onboard intelligence is transforming settings from factories to cities. Processing data locally avoids cloud latency and reduces communication costs. Distributed smart devices also keep functioning if networks go down. Artificial intelligence and machine learning drive responsive automation, analytics, and control at the point of action. This article explores the approaches and impact of embedding intelligence within the growing Internet of Things (IoT).

Chips to the Edge

Delivering intelligence requires compute power. Microcontrollers (MCUs) provide a flexible foundation for devices ranging from smart appliances to surveillance cameras. Leading MCUs now integrate ML accelerators to run neural networks efficiently. NXP’s i.MX RT series packs dedicated hardware for vision and inferencing, targeting industrial IoT and embedded vision. Meanwhile, Arm licenses its Ethos IP so partners can build custom ML chips. Adopting Ethos-U55 delivered over 4x efficiency gains for TensorFlow Lite models in CEVA’s NeuPro AI processors.

For more demanding workloads, systems-on-module (SOMs) combine microprocessors, GPUs, and memory as plug-in accelerators. Hardware partners like Advantech, Aaeon, and Auvidea offer SOMs on NVIDIA’s energy-efficient Jetson platform. Its CUDA cores and deep learning libraries help developers readily deploy computer vision and AI at the edge in robots, drones, and smart city infrastructure. Such modular designs reduce the effort needed to add analytics capabilities to existing equipment.

Even miniature ML accelerators maximize efficiency. Edge Impulse enables partners to rapidly deliver TinyML solutions using under 1MB of data. For example, Anthropic integrated Edge Impulse-trained micronet models into its humanistic robot platform. Onboard audio sensing and inference help it navigate safely among people. Targeted ML accelerates Massive IoT.

Optimizing Neural Networks

While hardware improvements help, optimized neural networks are essential for responsive edge intelligence. According to Pete Warden of Google’s TinyML team, “It’s all about compression, compilation, and efficient runtimes.” TinyML techniques like weight pruning, quantization, and distillation shrink model sizes 10-100x with minimal accuracy loss. The resulting compact networks run smoothly on microcontrollers with only kilobytes of memory.
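To make two of these techniques concrete, here is a minimal sketch of magnitude-based weight pruning followed by 8-bit affine quantization, written in plain Python. It is an illustration under stated assumptions, not the method of any particular toolchain: the function names (`prune_by_magnitude`, `quantize_int8`, `dequantize`) are hypothetical, the weights are random stand-ins for a trained layer, and distillation is not shown.

```python
import random

def prune_by_magnitude(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    pruned = list(weights)
    # Indices of the k weights closest to zero.
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    for i in smallest:
        pruned[i] = 0.0
    return pruned

def quantize_int8(weights):
    """Affine-quantize floats to int8. Returns (values, scale, zero_point)."""
    # Include 0.0 in the range so pruned weights stay exactly zero.
    lo = min(min(weights), 0.0)
    hi = max(max(weights), 0.0)
    scale = (hi - lo) / 255.0 or 1.0  # fall back to 1.0 if all weights are 0
    zero_point = round(-128 - lo / scale)
    q = [max(-128, min(127, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point

def dequantize(q, scale, zero_point):
    """Map int8 values back to approximate floats."""
    return [(v - zero_point) * scale for v in q]

if __name__ == "__main__":
    random.seed(0)
    # Hypothetical layer weights; a real model would supply trained values.
    layer = [random.uniform(-1.0, 1.0) for _ in range(1000)]
    sparse = prune_by_magnitude(layer, sparsity=0.8)
    q, scale, zp = quantize_int8(sparse)
    restored = dequantize(q, scale, zp)
    worst = max(abs(a - b) for a, b in zip(sparse, restored))
    print("zeroed weights:", sum(1 for w in sparse if w == 0.0))
    print("max round-trip error:", worst, "step size:", scale)
```

Storing each weight as one byte instead of a 32-bit float gives a 4x reduction on its own. The zeros left by pruning only save space once the weights are stored in a sparse or compressed format, and in practice both steps are usually followed by fine-tuning to recover accuracy.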

Startups like Syntiant and Blaize specialize in shrinking neural networks for edge devices. Syntiant’s deep compression better utilizes each weight while accommodating diverse activation functions. Their NDP120 vision AI Processor achieves over 20x efficiency gains versus GPUs. Meanwhile, Blaize Picasso boasts up to 90x improvements over CPUs for automotive, security, and industrial applications. Such optimized ML inference at under 1W empowers smarter, greener edge hardware.

Addressing Expanding Applications

Bespoke solutions allow tailoring intelligence to unique use cases. LatentAI’s foundation SDK helps developers quickly train and optimize custom vision AI for clients from fauna monitoring to molecular imaging. Vertical integration improves performance and usability. As CEO Jags Kandasamy notes, “Purpose-built tools give smaller teams the same capabilities as tech giants, democratizing the benefits for more organizations.”

In healthcare, chronic disease management relies on intelligent wearables and sensors. Startup physIQ developed an end-to-end solution integrating its PinpointIQ biosensing platform with edge gateways and analytics. On-body nodes capture and process vital sign data before transmitting actionable patient insights securely to the cloud. Such edge intelligence improves outcomes while maintaining privacy.

Agriculture is similarly adopting smart sensing. Harv calls its equipment-mounted cameras and AI “the eyes in the skies for tractor drivers.” The edge vision system guides harvesting equipment over crops, even with obstructed views, preventing losses. Such incremental gains add up to substantial economic impact across industries as organizations build expertise.

Reclaiming Data Sovereignty

Beyond technical benefits, distributed intelligence addresses growing concerns over data privacy and ownership. Transferring large data volumes outside a facility relinquishes control and incurs liability. Edge AI avoids this by extracting insights securely within operations.

Manufacturing firm OnRobot equips its cobot arms with edge ML accelerators for real-time vision. Video stays on-premises while only actionable signals reach the cloud. As CTO Jean-Philippe Jobin explains, “Edge intelligence keeps our customers’ production data private and secure.” Such capabilities help factories gain automation without compromising proprietary information.
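The general pattern of keeping raw video local and uplinking only compact signals can be sketched as follows. This is a hypothetical illustration, not OnRobot’s implementation: the `Detection` type, the `summarize_frame` and `uplink_payloads` functions, the 0.8 confidence threshold, and the JSON payload shape are all assumptions made for the example.

```python
import json
from dataclasses import dataclass, asdict

@dataclass
class Detection:
    """One result from an on-device vision model (hypothetical shape)."""
    label: str
    confidence: float

def summarize_frame(frame_id, detections, threshold=0.8):
    """Build a compact event from confident detections, or None if there are none.

    Raw pixel data is never passed in, so it cannot leak into the payload.
    """
    hits = [d for d in detections if d.confidence >= threshold]
    if not hits:
        return None
    return {"frame_id": frame_id, "events": [asdict(d) for d in hits]}

def uplink_payloads(frames, threshold=0.8):
    """Yield JSON strings for the cloud; frames without events produce nothing."""
    for frame_id, _pixels, detections in frames:
        event = summarize_frame(frame_id, detections, threshold)
        if event is not None:
            yield json.dumps(event)

if __name__ == "__main__":
    # Each frame is (id, raw pixels, detections); the pixels stay on the device.
    frames = [
        (1, b"\x00" * 1024, [Detection("part_misaligned", 0.93)]),
        (2, b"\x00" * 1024, [Detection("part_misaligned", 0.41)]),
        (3, b"\x00" * 1024, []),
    ]
    for payload in uplink_payloads(frames):
        print(payload)
```

In this sketch only the first frame produces an uplink message, and that message is a few dozen bytes rather than a full frame.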

Similarly, new standards like imec’s ML FPGA allow pretrained models to execute inside FPGAs owned by customers. Companies avoid handing over sensitive IP even when using others’ algorithms. Imec’s collection of optimized ML blocks gives adopters an extensible edge ML toolbox while protecting intellectual property. Democratized edge intelligence balances access and security.

Intelligence Where It Matters Most

In essence, embedding intelligence within connected devices rather than centralized servers unlocks responsiveness and privacy. MCUs, accelerators, and optimized networks now provide flexible foundations for context-aware automation across settings. Unlocking value from data where it originates improves outcomes while reducing risks. Edge intelligence marks a paradigm shift toward empowering stakeholders rather than relinquishing control.